Alpha max plus beta min algorithm

From Wikipedia, the free encyclopedia

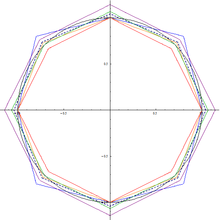

High-speed approximation of the square root of the sum of two squares

The alpha max plus beta min algorithm is a high-speed approximation of the square root of the sum of two squares. The square root of the sum of two squares, also known as Pythagorean addition, is a useful function, because it finds the hypotenuse of a right triangle given the two side lengths, the norm of a 2-D vector, or the magnitude

of a complex number z = a + bi given the real and imaginary parts.

The algorithm avoids performing the square and square-root operations, instead using simple operations such as comparison, multiplication, and addition. Some choices of the α and β parameters of the algorithm allow the multiplication operation to be reduced to a simple shift of binary digits that is particularly well suited to implementation in high-speed digital circuitry.

The approximation is expressed as

where

is the maximum absolute value of a and b, and

is the minimum absolute value of a and b.

For the closest approximation, the optimum values for

and

are

and

, giving a maximum error of 3.96%.

| Largest error (%) | Mean error (%) | ||

|---|---|---|---|

| 1/1 | 1/2 | 11.80 | 8.68 |

| 1/1 | 1/4 | 11.61 | 3.20 |

| 1/1 | 3/8 | 6.80 | 4.25 |

| 7/8 | 7/16 | 12.50 | 4.91 |

| 15/16 | 15/32 | 6.25 | 3.08 |

| 3.96 | 2.41 |

Improvements[edit]

When

,

becomes smaller than

(which is geometrically impossible) near the axes where

is near 0.

This can be remedied by replacing the result with

whenever that is greater, essentially splitting the line into two different segments.

Depending on the hardware, this improvement can be almost free.

Using this improvement changes which parameter values are optimal, because they no longer need a close match for the entire interval. A lower

and higher

can therefore increase precision further.

Increasing precision: When splitting the line in two like this one could improve precision even more by replacing the first segment by a better estimate than

, and adjust

and

accordingly.

| Largest error (%) | ||||

|---|---|---|---|---|

| 1 | 0 | 7/8 | 17/32 | −2.65% |

| 1 | 0 | 29/32 | 61/128 | +2.4% |

| 1 | 0 | 0.898204193266868 | 0.485968200201465 | ±2.12% |

| 1 | 1/8 | 7/8 | 33/64 | −1.7% |

| 1 | 5/32 | 27/32 | 71/128 | 1.22% |

| 127/128 | 3/16 | 27/32 | 71/128 | −1.13% |

Beware however, that a non-zero

would require at least one extra addition and some bit-shifts (or a multiplication), probably nearly doubling the cost and, depending on the hardware, possibly defeat the purpose of using an approximation in the first place.

See also[edit]

- Hypot, a precise function or algorithm that is also safe against overflow and underflow.

References[edit]

External links[edit]

of a complex number

of a complex number

is the maximum absolute value of a and b, and

is the maximum absolute value of a and b, and  is the minimum absolute value of a and b.

is the minimum absolute value of a and b. and

and  are

are  and

and  , giving a maximum error of 3.96%.

, giving a maximum error of 3.96%.

,

,  becomes smaller than

becomes smaller than

Recent Comments